Notes: LRU cache implementation in Go

In this blog, we have implemented goroutine-safe LRU cache implementation in Go

Table of contents

No headings in the article.

LRU (Least Recently Used) is a common caching algorithm. This algorithm is used to keep track of recently used items while evicting the least recently used items when the cache is full. In our example, we'll implement a basic LRU cache implementation.

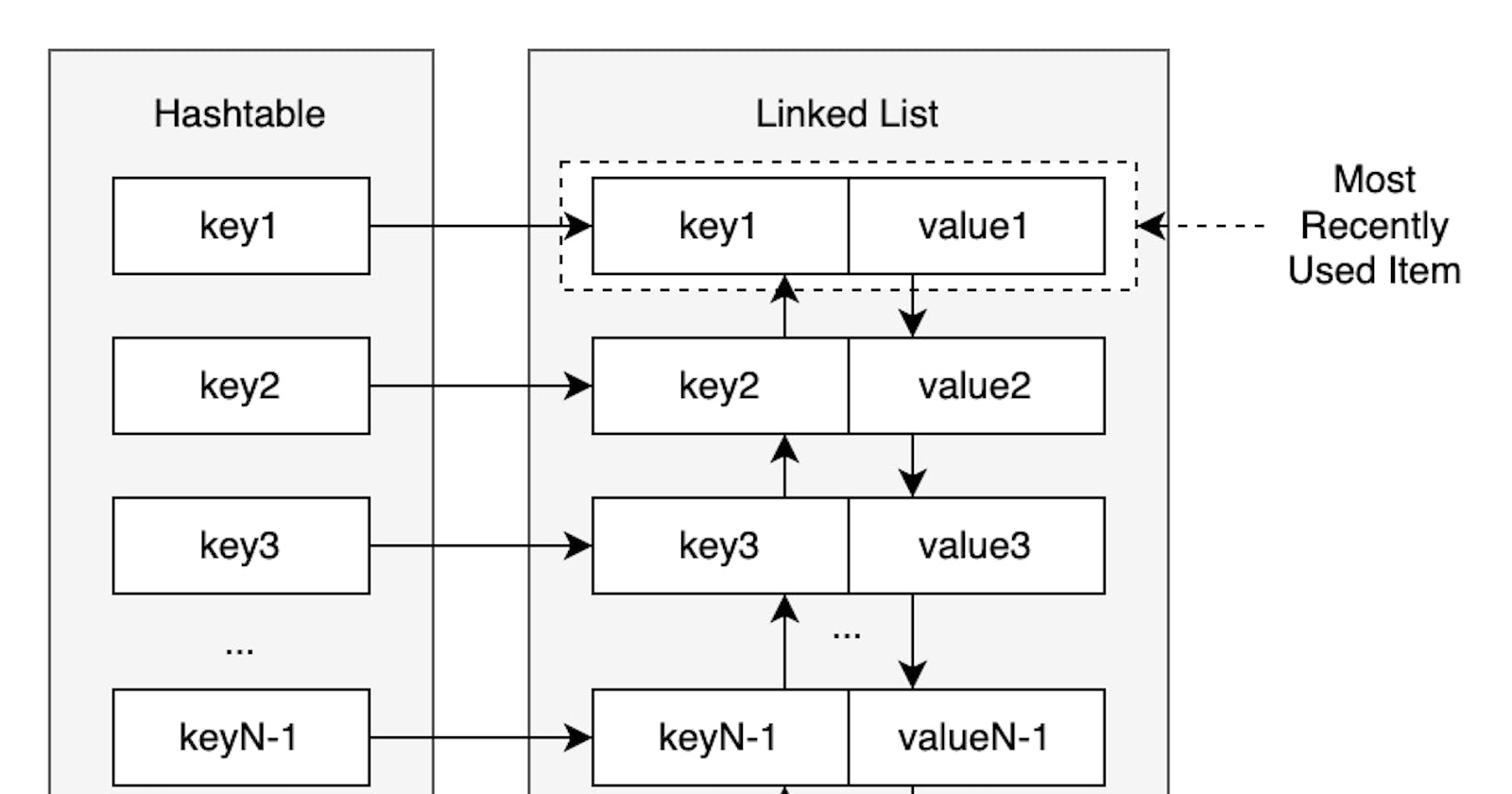

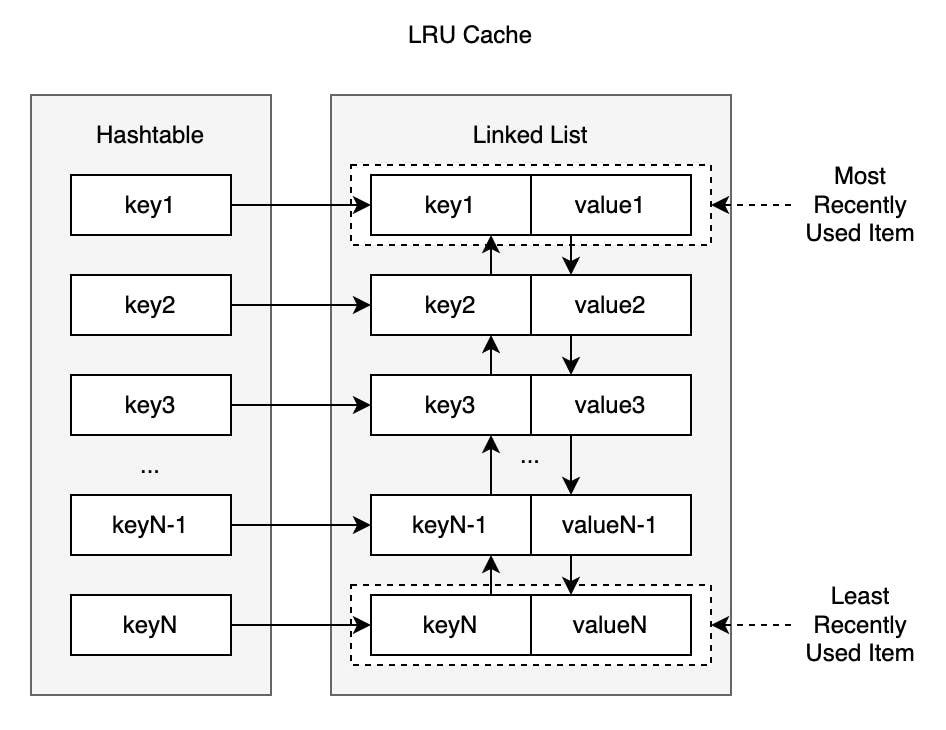

In LRU cache we use two data structures in conjunction, Map to reduce lookup time to O(1) while doubly-linked list to keep the cached objects in sequence and reduce eviction time to O(1) as it evicts tail element. We'll use a common lock for reading and writing on the cache to avoid dirty reads and writes.

Our cache object will contain key and value, key for the uniqueness of the record, and the value contains the actual required value to be cached.

type cacheKey int

type cacheValue interface{}

type CacheObject struct {

key cacheKey

value cacheValue

}

Now our LRU cache data structure will look like this. Note that we have added capacity as well to allow eviction on least recently used cached objects and maintain the fixed size. Here list is a doubly-linked list from the library (list), elements is the map that maps the cache object key with the address of the object cached in the list and lastly, the mutex is the common lock.

type LRUCache struct {

capacity int

list *list.List

elements map[cacheKey]*list.Element

mutex *sync.RWMutex

}

A simple diagram representing the mapping. Source: Roman Glushko Blog

Now that our cache object and LRU cache are defined, we'll have three functional methods to handle caching operations.

Get: In this method, we check ifkeyexists in the map. If it does, we return the value inO(1)time, else we return "cache key not found" error. And in case thekeywas there, we push the searched element to the top of ourlist.func (cache *LRUCache) Get(key cacheKey) (cacheValue, error) { cache.mutex.RLock() defer cache.mutex.RUnlock() elem, ok := cache.elements[key] if ok { value := elem.Value.(*CacheObject).value cache.list.MoveToFront(elem) return value, nil } return nil, fmt.Errorf("cache key not found") }Put: In this method, first we check if the key exists. If it does, we update the value/ element associated with thekeyand push that on top of thelist. If thekeyis not found, we first check if there is space left on the cache. If our cache is full, we evict the last element of thelistand remove it from the map as well. After that, we add our new element to the top of thelistand add a value in the map pointing to it.func (cache *LRUCache) Put(key cacheKey, value cacheValue) { cache.mutex.Lock() defer cache.mutex.Unlock() elem, ok := cache.elements[key] if ok { elem.Value = &CacheObject{ key: key, value: value, } cache.list.MoveToFront(elem) } else { if cache.list.Len() == cache.capacity { key := cache.list.Back().Value.(*CacheObject).key delete(cache.elements, key) cache.list.Remove(cache.list.Back()) } cacheObj := &CacheObject{ key: key, value: value, } pointer := cache.list.PushFront(cacheObj) cache.elements[key] = pointer } }Purge: This method basically resets the cache and creates a fresh new cache with old capacity.func (cache *LRUCache) Purge() { cache.list = new(list.List) cache.elements = make(map[cacheKey]*list.Element, cache.capacity) cache.mutex = &sync.RWMutex{} }You can check the below code with the expected results marked with comments

//. Try testing with different other versions as well to get a better understanding and maybe extend with your improvements. Complete working code:

package main

import (

"container/list"

"fmt"

"sync"

)

type cacheKey int

type cacheValue interface{}

// actual cache object to be stored in LRU cache

type CacheObject struct {

key cacheKey

value cacheValue

}

// capacity: maximum capacity of cache

// list: doubly linked list to store our cache objects

// elements: map to point elements of linked list based on "key"

// mutex: to enable locking the cache

type LRUCache struct {

capacity int

list *list.List

elements map[cacheKey]*list.Element

mutex *sync.RWMutex

}

func New(capacity int) (*LRUCache, error) {

if capacity == 0 {

return nil, fmt.Errorf("capacity cannot be 0")

}

return &LRUCache{

capacity: capacity,

list: new(list.List),

elements: make(map[cacheKey]*list.Element, capacity),

mutex: &sync.RWMutex{},

}, nil

}

// lock for writers, open for readers

// if cache key exists, returns the value associated with it

// and moves the accessed element to the front of the list

// if not, return error

func (cache *LRUCache) Get(key cacheKey) (cacheValue, error) {

cache.mutex.RLock()

defer cache.mutex.RUnlock()

elem, ok := cache.elements[key]

if ok {

value := elem.Value.(*CacheObject).value

cache.list.MoveToFront(elem)

return value, nil

}

return nil, fmt.Errorf("cache key not found")

}

// lock for all writers and readers

// if cache key exists, simply update the value abd move it to the front

// else, if max cache capacity is reached, delete last element from list and map

// and add new list element containing new cache object to the front of the list

// and add to the map

func (cache *LRUCache) Put(key cacheKey, value cacheValue) {

cache.mutex.Lock()

defer cache.mutex.Unlock()

elem, ok := cache.elements[key]

if ok {

elem.Value = &CacheObject{

key: key,

value: value,

}

cache.list.MoveToFront(elem)

} else {

if cache.list.Len() == cache.capacity {

key := cache.list.Back().Value.(*CacheObject).key

delete(cache.elements, key)

cache.list.Remove(cache.list.Back())

}

cacheObj := &CacheObject{

key: key,

value: value,

}

pointer := cache.list.PushFront(cacheObj)

cache.elements[key] = pointer

}

}

// purge all the cache and reinitializing everything except capacity

func (cache *LRUCache) Purge() {

cache.list = new(list.List)

cache.elements = make(map[cacheKey]*list.Element, cache.capacity)

cache.mutex = &sync.RWMutex{}

}

// prints all the objects in the list from head to tail

func (cache *LRUCache) Print() {

currentElem := cache.list.Front()

if currentElem == nil {

fmt.Println("nothing to show")

return

}

for {

if currentElem.Next() == nil {

fmt.Printf("Key: %d, Value: %+v\n", currentElem.Value.(*CacheObject).key, currentElem.Value.(*CacheObject).value)

break

}

fmt.Printf("Key: %d, Value: %+v\n", currentElem.Value.(*CacheObject).key, currentElem.Value.(*CacheObject).value)

currentElem = currentElem.Next()

}

fmt.Println()

}

func main() {

lru, _ := New(5)

lru.Put(1, "hello") // 1

lru.Put(2, 20) // 1 -> 2

lru.Put(3, 4.5) // 1 -> 2 -> 3

lru.Print()

fmt.Println(lru.Get(1)) // 2 -> 3 -> 1

fmt.Println(lru.Get(3)) // 2 -> 1 -> 3

fmt.Println()

lru.Print()

lru.Put(1, true) // 2 -> 3 -> 1

lru.Print()

lru.Put(4, "world") // 2 -> 3 -> 1 -> 4

lru.Put(5, false) // 2 -> 3 -> 1 -> 4 -> 5

lru.Put(6, "drops 2 => 20") // 3 -> 1 -> 4 -> 5 -> 6

lru.Print()

lru.Purge()

lru.Print()

fmt.Println()

lru.Put(1, "back") // 1

lru.Put(2, 2) // 1 -> 2

lru.Put(3, true) // 1 -> 2 -> 3

lru.Print()

}